Dongxiao He, Lianze Shan, Jitao Zhao, Hengrui Zhang, Zhen Wang, Weixiong Zhang

rating : 8.75 - [9, 8, 9, 9] - Oral

Graph Contrastive Learning (GCL) has emerged as a powerful approach for generating graph representations without the need for manual annotation. Most advanced GCL methods fall into three main frameworks: node discrimination, group discrimination, and bootstrapping schemes, all of which achieve comparable performance. However, the underlying mechanisms and factors that contribute to their effectiveness are not yet fully understood. In this paper, we revisit these frameworks and reveal a common mechanism, representation scattering, that significantly enhances their performance. Our discovery highlights an essential feature of GCL and unifies these seemingly disparate methods under the concept of representation scattering. To leverage this insight, we introduce Scattering Graph Representation Learning (SGRL), a novel framework that incorporates a new representation scattering mechanism designed to enhance representation diversity through a center-away strategy. Additionally, considering the interconnected nature of graphs, we develop a topology-based constraint mechanism that integrates graph structural properties with representation scattering to prevent excessive scattering. We extensively evaluate SGRL across various downstream tasks on benchmark datasets, demonstrating its efficacy and superiority over existing GCL methods. Our findings underscore the significance of representation scattering in GCL and provide a structured framework for harnessing this mechanism to advance graph representation learning. The code of SGRL is at https://github.com/hedongxiao-tju/SGRL.

Felix Petersen, Hilde Kuehne, Christian Borgelt, Julian Welzel, Stefano Ermon

rating : 8.25 - [8, 9, 8, 8] - Oral

With the increasing inference cost of machine learning models, there is a growing interest in models with fast and efficient inference.

Recently, an approach for learning logic gate networks directly via a differentiable relaxation was proposed. Logic gate networks are faster than conventional neural network approaches because their inference only requires logic gate operators such as NAND, OR, and XOR, which are the underlying building blocks of current hardware and can be efficiently executed. We build on this idea, extending it by deep logic gate tree convolutions, logical OR pooling, and residual initializations. This allows scaling logic gate networks up by over one order of magnitude and utilizing the paradigm of convolution. On CIFAR-10, we achieve an accuracy of 86.29% using only 61 million logic gates, which improves over the SOTA while being 29x smaller.
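The differentiable relaxation mentioned above can be illustrated with the usual probabilistic relaxations of Boolean gates. The following is a minimal sketch, not the authors' implementation: it assumes inputs in [0, 1], a softmax-weighted mixture over a small gate set during training, and an argmax choice at inference. The function names and the three-gate set are illustrative assumptions.

```python
import math

# Real-valued relaxations of Boolean gates for inputs a, b in [0, 1].
# They agree with the hard gates whenever a and b are exactly 0 or 1.
def soft_nand(a, b):
    return 1.0 - a * b

def soft_or(a, b):
    return a + b - a * b

def soft_xor(a, b):
    return a + b - 2.0 * a * b

# Illustrative gate set (an assumption; a real network may use more gates).
GATES = [soft_nand, soft_or, soft_xor]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_gate(a, b, logits):
    """Training-time neuron: softmax-weighted mixture of the relaxed gates."""
    weights = softmax(logits)
    return sum(w * g(a, b) for w, g in zip(weights, GATES))

def hard_gate(a, b, logits):
    """Inference-time neuron: keep only the gate with the largest logit."""
    best = max(range(len(GATES)), key=lambda i: logits[i])
    return GATES[best](a, b)
```

Because the mixture is differentiable in `logits`, the gate choice can be trained by gradient descent and then discretized to a single gate per neuron.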

Salva Rühling Cachay, Brian Henn, Oliver Watt-Meyer, Christopher S. Bretherton, Rose Yu

rating : 8.0 - [8, 8, 8, 8] - Spotlight

tl;dr: We present the first conditional generative model for efficient, probabilistic emulation of a realistic global climate model, which beats relevant baselines and nearly reaches a gold standard for successful climate model emulation.

Data-driven deep learning models are transforming global weather forecasting. It is an open question if this success can extend to climate modeling, where the complexity of the data and long inference rollouts pose significant challenges. Here, we present the first conditional generative model that produces accurate and physically consistent global climate ensemble simulations by emulating a coarse version of the United States' primary operational global forecast model, FV3GFS.

Our model integrates the dynamics-informed diffusion framework (DYffusion) with the Spherical Fourier Neural Operator (SFNO) architecture, enabling stable 100-year simulations at 6-hourly timesteps while maintaining low computational overhead compared to single-step deterministic baselines.

The model achieves near gold-standard performance for climate model emulation, outperforming existing approaches and demonstrating promising ensemble skill.

This work represents a significant advance towards efficient, data-driven climate simulations that can enhance our understanding of the climate system and inform adaptation strategies. Code is available at [https://github.com/Rose-STL-Lab/spherical-dyffusion](https://github.com/Rose-STL-Lab/spherical-dyffusion).

Xi Zhang, Yuan Pu, Yuki Kawamura, Andrew Loza, Yoshua Bengio, Dennis Shung, Alexander Tong

rating : 8.0 - [7, 10, 7] - Spotlight

tl;dr: Flow Matching for continuous stochastic modelling of time series data with applications to clinical time series

Modeling stochastic and irregularly sampled time series is a challenging problem found in a wide range of applications, especially in medicine. Neural stochastic differential equations (Neural SDEs) are an attractive modeling technique for this problem, which parameterize the drift and diffusion terms of an SDE with neural networks. However, current algorithms for training Neural SDEs require backpropagation through the SDE dynamics, greatly limiting their scalability and stability.

To address this, we propose **Trajectory Flow Matching** (TFM), which trains a Neural SDE in a *simulation-free* manner, bypassing backpropagation through the dynamics. TFM leverages the flow matching technique from generative modeling to model time series. In this work we first establish necessary conditions for TFM to learn time series data. Next, we present a reparameterization trick which improves training stability. Finally, we adapt TFM to the clinical time series setting, demonstrating improved performance on three clinical time series datasets both in terms of absolute performance and uncertainty prediction.

Maximilian Beck, Korbinian Pöppel, Markus Spanring, Andreas Auer, Oleksandra Prudnikova, Michael K Kopp, Günter Klambauer, Johannes Brandstetter, Sepp Hochreiter

rating : 8.0 - [7, 7, 10] - Spotlight

tl;dr: We extend the LSTM architecture with exponential gating and new memory structures and show that this new xLSTM performs favorably on large-scale language modeling tasks.

In the 1990s, the constant error carousel and gating were introduced as the central ideas of the Long Short-Term Memory (LSTM). Since then, LSTMs have stood the test of time and contributed to numerous deep learning success stories, in particular they constituted the first Large Language Models (LLMs). However, the advent of the Transformer technology with parallelizable self-attention at its core marked the dawn of a new era, outpacing LSTMs at scale. We now raise a simple question: How far do we get in language modeling when scaling LSTMs to billions of parameters, leveraging the latest techniques from modern LLMs, but mitigating known limitations of LSTMs? Firstly, we introduce exponential gating with appropriate normalization and stabilization techniques. Secondly, we modify the LSTM memory structure, obtaining: (i) sLSTM with a scalar memory, a scalar update, and new memory mixing, (ii) mLSTM that is fully parallelizable with a matrix memory and a covariance update rule. Integrating these LSTM extensions into residual block backbones yields xLSTM blocks that are then residually stacked into xLSTM architectures. Exponential gating and modified memory structures boost xLSTM capabilities to perform favorably when compared to state-of-the-art Transformers and State Space Models, both in performance and scaling.

Ilija Radosavovic, Jathushan Rajasegaran, Baifeng Shi, Bike Zhang, Sarthak Kamat, Koushil Sreenath, Trevor Darrell, Jitendra Malik

rating : 7.75 - [8, 7, 8, 8] - Spotlight

tl;dr: We cast real-world humanoid control as a next token prediction problem.

We cast real-world humanoid control as a next token prediction problem, akin to predicting the next word in language. Our model is a causal transformer trained via autoregressive prediction of sensorimotor sequences. To account for the multi-modal nature of the data, we perform prediction in a modality-aligned way, and for each input token predict the next token from the same modality. This general formulation enables us to leverage data with missing modalities, such as videos without actions. We train our model on a dataset of sequences from prior neural network policies, model-based controllers, motion capture, and YouTube videos of humans. We show that our model enables a real humanoid robot to walk in San Francisco zero-shot. Our model can transfer to the real world even when trained on only 27 hours of walking data, and can generalize to commands not seen during training. These findings suggest a promising path toward learning challenging real-world control tasks by generative modeling of sensorimotor sequences.

Keyu Tian, Yi Jiang, Zehuan Yuan, Bingyue Peng, Liwei Wang

rating : 7.75 - [7, 8, 8, 8] - Oral

tl;dr: Our VAR (Visual AutoRegressive modeling), for the first time, makes GPT-style autoregressive models surpass Diffusion Transformers, and demonstrates Scaling Laws for image generation with solid evidence.

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction". This simple, intuitive methodology allows autoregressive (AR) transformers to learn visual distributions fast and generalize well: VAR, for the first time, makes GPT-style AR models surpass diffusion transformers in image generation. On the ImageNet 256x256 benchmark, VAR significantly improves the AR baseline, improving the Frechet inception distance (FID) from 18.65 to 1.73 and the inception score (IS) from 80.4 to 350.2, with around 20x faster inference. It is also empirically verified that VAR outperforms the Diffusion Transformer (DiT) in multiple dimensions including image quality, inference speed, data efficiency, and scalability. Scaling up VAR models exhibits clear power-law scaling laws similar to those observed in LLMs, with linear correlation coefficients near -0.998 as solid evidence. VAR further showcases zero-shot generalization ability in downstream tasks including image in-painting, out-painting, and editing. These results suggest VAR has initially emulated the two important properties of LLMs: Scaling Laws and zero-shot task generalization. We have released all models and code to promote the exploration of AR/VAR models for visual generation and unified learning.

Matthew Zurek, Yudong Chen

rating : 7.75 - [10, 7, 7, 7] - Oral

tl;dr: We resolve the span-based sample complexity of weakly communicating average reward MDPs and initiate the study of general multichain MDPs, obtaining minimax optimal bounds and uncovering improved horizon dependence for fixed discounted MDP instances.

We study the sample complexity of learning an $\varepsilon$-optimal policy in an average-reward Markov decision process (MDP) under a generative model. For weakly communicating MDPs, we establish the complexity bound $\widetilde{O}\left(SA\frac{\mathsf{H}}{\varepsilon^2} \right)$, where $\mathsf{H}$ is the span of the bias function of the optimal policy and $SA$ is the cardinality of the state-action space. Our result is the first that is minimax optimal (up to log factors) in all parameters $S,A,\mathsf{H}$, and $\varepsilon$, improving on existing work that either assumes uniformly bounded mixing times for all policies or has suboptimal dependence on the parameters. We also initiate the study of sample complexity in general (multichain) average-reward MDPs. We argue a new transient time parameter $\mathsf{B}$ is necessary, establish an $\widetilde{O}\left(SA\frac{\mathsf{B} + \mathsf{H}}{\varepsilon^2} \right)$ complexity bound, and prove a matching (up to log factors) minimax lower bound. Both results are based on reducing the average-reward MDP to a discounted MDP, which requires new ideas in the general setting. To optimally analyze this reduction, we develop improved bounds for $\gamma$-discounted MDPs, showing that $\widetilde{O}\left(SA\frac{\mathsf{H}}{(1-\gamma)^2\varepsilon^2} \right)$ and $\widetilde{O}\left(SA\frac{\mathsf{B} + \mathsf{H}}{(1-\gamma)^2\varepsilon^2} \right)$ samples suffice to learn $\varepsilon$-optimal policies in weakly communicating and in general MDPs, respectively. Both these results circumvent the well-known minimax lower bound of $\widetilde{\Omega}\left(SA\frac{1}{(1-\gamma)^3\varepsilon^2} \right)$ for $\gamma$-discounted MDPs, and establish a quadratic rather than cubic horizon dependence for a fixed MDP instance.

Yuxin Du, Fan Bai, Tiejun Huang, Bo Zhao

rating : 7.67 - [8, 7, 8] - Spotlight

tl;dr: We propose a foundation model for universal and interactive volumetric medical image segmentation, trained on the collected 90K unlabeled and 6K labeled data.

Precise image segmentation provides instructive information for clinical studies. Despite the remarkable progress achieved in medical image segmentation, there is still no 3D foundation segmentation model that can segment a wide range of anatomical categories with easy user interaction. In this paper, we propose a 3D foundation segmentation model, named SegVol, supporting universal and interactive volumetric medical image segmentation. By scaling up training data to 90K unlabeled Computed Tomography (CT) volumes and 6K labeled CT volumes, this foundation model supports the segmentation of over 200 anatomical categories using semantic and spatial prompts. To facilitate efficient and precise inference on volumetric images, we design a zoom-out-zoom-in mechanism. Extensive experiments on 22 anatomical segmentation tasks verify that SegVol outperforms the competitors in 19 tasks, with improvements up to 37.24\% compared to the runner-up methods. We demonstrate the effectiveness and importance of specific designs through an ablation study. We expect this foundation model to promote the development of volumetric medical image analysis. The model and code are publicly available at https://github.com/BAAI-DCAI/SegVol.

Zhenghao Lin, Zhibin Gou, Yeyun Gong, Xiao Liu, Yelong Shen, Ruochen Xu, Chen Lin, Yujiu Yang, Jian Jiao, Nan Duan, Weizhu Chen

rating : 7.67 - [9, 7, 7] - Oral

tl;dr: We introduce Selective Language Modeling (SLM), a method for token-level pretraining data selection.

Previous language model pre-training methods have uniformly applied a next-token prediction loss to all training tokens. Challenging this norm, we posit that "Not all tokens in a corpus are equally important for language model training". Our initial analysis examines token-level training dynamics of language models, revealing distinct loss patterns for different tokens. Leveraging these insights, we introduce a new language model called Rho-1. Unlike traditional LMs that learn to predict every next token in a corpus, Rho-1 employs Selective Language Modeling (SLM), which selectively trains on useful tokens that align with the desired distribution. This approach involves scoring training tokens using a reference model, and then training the language model with a focused loss on tokens with higher scores. When continually pretrained on the 15B OpenWebMath corpus, Rho-1 yields an absolute improvement in few-shot accuracy of up to 30% in 9 math tasks. After fine-tuning, Rho-1-1B and 7B achieved state-of-the-art results of 40.6% and 51.8% on the MATH dataset, respectively, matching DeepSeekMath with only 3% of the pretraining tokens. Furthermore, when continually pretrained on 80B general tokens, Rho-1 achieves a 6.8% average enhancement across 15 diverse tasks, increasing both the data efficiency and the performance of language model pre-training.
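The scoring-then-selecting step described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the score is assumed to be the training model's per-token loss minus the reference model's per-token loss, and the function name `selective_lm_loss` and the `keep_ratio` parameter are hypothetical.

```python
def selective_lm_loss(model_losses, reference_losses, keep_ratio=0.6):
    """Average the training loss over the highest-scoring tokens only.

    model_losses / reference_losses: per-token next-token losses from the
    model being trained and from a fixed reference model (same length).
    keep_ratio: fraction of tokens kept (hypothetical default).
    Returns (loss, sorted indices of the selected tokens).
    """
    if not model_losses or len(model_losses) != len(reference_losses):
        raise ValueError("need two non-empty loss lists of equal length")
    n = len(model_losses)
    # Assumed score: how much worse the current model is than the reference.
    scores = [m - r for m, r in zip(model_losses, reference_losses)]
    k = max(1, int(n * keep_ratio))
    selected = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    # Only the selected tokens contribute to the loss (and thus to gradients).
    loss = sum(model_losses[i] for i in selected) / k
    return loss, sorted(selected)
```

In a real training loop the same selection would be applied as a mask on the per-token loss tensor before averaging.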

Sudeep Salgia, Yuejie Chi

rating : 7.67 - [7, 8, 8] - Oral

tl;dr: Characterization of Sample-Communication Trade-off in Federated Q-learning with both converse and achievability results

We consider the problem of Federated Q-learning, where $M$ agents aim to collaboratively learn the optimal Q-function of an unknown infinite-horizon Markov Decision Process with finite state and action spaces. We investigate the trade-off between sample and communication complexity for the widely used class of intermittent communication algorithms. We first establish the converse result, where we show that any Federated Q-learning algorithm that offers a linear speedup in sample complexity with respect to the number of agents needs to incur a communication cost of at least $\Omega(\frac{1}{1-\gamma})$, where $\gamma$ is the discount factor. We also propose a new Federated Q-learning algorithm, called Fed-DVR-Q, which is the first Federated Q-learning algorithm to simultaneously achieve order-optimal sample and communication complexities. Thus, together these results provide a complete characterization of the sample-communication complexity trade-off in Federated Q-learning.

Xizhou Zhu, Xue Yang, Zhaokai Wang, Hao Li, Wenhan Dou, Junqi Ge, Lewei Lu, Yu Qiao, Jifeng Dai

rating : 7.67 - [7, 7, 9] - Spotlight

tl;dr: We propose the Parameter-Inverted Image Pyramid Networks to address the computational challenges of traditional image pyramids.

Image pyramids are commonly used in modern computer vision tasks to obtain multi-scale features for precise understanding of images. However, image pyramids process multiple resolutions of images using the same large-scale model, which requires significant computational cost. To overcome this issue, we propose a novel network architecture known as the Parameter-Inverted Image Pyramid Networks (PIIP). Our core idea is to use models with different parameter sizes to process different resolution levels of the image pyramid, thereby balancing computational efficiency and performance. Specifically, the input to PIIP is a set of multi-scale images, where higher resolution images are processed by smaller networks. We further propose a feature interaction mechanism to allow features of different resolutions to complement each other and effectively integrate information from different spatial scales. Extensive experiments demonstrate that the PIIP achieves superior performance in tasks such as object detection, segmentation, and image classification, compared to traditional image pyramid methods and single-branch networks, while reducing computational cost. Notably, when applying our method on a large-scale vision foundation model InternViT-6B, we improve its performance by 1\%-2\% on detection and segmentation with only 40\%-60\% of the original computation. These results validate the effectiveness of the PIIP approach and provide a new technical direction for future vision computing tasks.

MohammadReza Ebrahimi, Jun Chen, Ashish J Khisti

rating : 7.67 - [8, 7, 8] - Spotlight

tl;dr: We present a novel lossy compression framework under log loss called Minimum Entropy Coupling with Bottleneck.

This paper investigates a novel lossy compression framework operating under logarithmic loss, designed to handle situations where the reconstruction distribution diverges from the source distribution. This framework is especially relevant for applications that require joint compression and retrieval, and in scenarios involving distributional shifts due to processing. We show that the proposed formulation extends the classical minimum entropy coupling framework by integrating a bottleneck, allowing for controlled variability in the degree of stochasticity in the coupling.

We explore the decomposition of the Minimum Entropy Coupling with Bottleneck (MEC-B) into two distinct optimization problems: Entropy-Bounded Information Maximization (EBIM) for the encoder, and Minimum Entropy Coupling (MEC) for the decoder. Through extensive analysis, we provide a greedy algorithm for EBIM with guaranteed performance, and characterize the optimal solution near functional mappings, yielding significant theoretical insights into the structural complexity of this problem.

Furthermore, we illustrate the practical application of MEC-B through experiments in Markov Coding Games (MCGs) under rate limits. These games simulate a communication scenario within a Markov Decision Process, where an agent must transmit a compressed message from a sender to a receiver through its actions. Our experiments highlight the trade-offs between MDP rewards and receiver accuracy across various compression rates, showcasing the efficacy of our method compared to a conventional compression baseline.

Ben Adcock, Nick Dexter, Sebastian Moraga

rating : 7.67 - [7, 8, 8] - Spotlight

tl;dr: We show that deep learning with fully-connected deep neural networks is optimal for learning holomorphic operators

Operator learning problems arise in many key areas of scientific computing where Partial Differential Equations (PDEs) are used to model physical systems. In such scenarios, the operators map between Banach or Hilbert spaces. In this work, we tackle the problem of learning operators between Banach spaces, in contrast to the vast majority of past works considering only Hilbert spaces. We focus on learning holomorphic operators -- an important class of problems with many applications. We combine arbitrary approximate encoders and decoders with standard feedforward Deep Neural Network (DNN) architectures -- specifically, those with constant width exceeding the depth -- under standard $\ell^2$-loss minimization. We first identify a family of DNNs such that the resulting Deep Learning (DL) procedure achieves optimal generalization bounds for such operators. For standard fully-connected architectures, we then show that there are uncountably many minimizers of the training problem that yield equivalent optimal performance. The DNN architectures we consider are 'problem agnostic', with width and depth only depending on the amount of training data $m$ and not on regularity assumptions of the target operator. Next, we show that DL is optimal for this problem: no recovery procedure can surpass these generalization bounds up to log terms. Finally, we present numerical results demonstrating the practical performance on challenging problems including the parametric diffusion, Navier-Stokes-Brinkman and Boussinesq PDEs.

Haokun Lin, Haobo Xu, Yichen Wu, Jingzhi Cui, Yingtao Zhang, Linzhan Mou, Linqi Song, Zhenan Sun, Ying Wei

rating : 7.6 - [7, 8, 8, 7, 8] - Oral

tl;dr: We identify massive outliers in the down-projection layer of the FFN module and introduce DuQuant, which uses rotation and permutation transformations to effectively mitigate both massive and normal outliers.

Quantization of large language models (LLMs) faces significant challenges, particularly due to the presence of outlier activations that impede efficient low-bit representation. Traditional approaches predominantly address Normal Outliers, which are activations across all tokens with relatively large magnitudes. However, these methods struggle with smoothing Massive Outliers that display significantly larger values, which leads to significant performance degradation in low-bit quantization. In this paper, we introduce DuQuant, a novel approach that utilizes rotation and permutation transformations to more effectively mitigate both massive and normal outliers. First, DuQuant constructs the rotation matrix, using specific outlier dimensions as prior knowledge, to redistribute outliers to adjacent channels by block-wise rotation. Second, we employ a zigzag permutation to balance the distribution of outliers across blocks, thereby reducing block-wise variance. A subsequent rotation further smooths the activation landscape, enhancing model performance. DuQuant simplifies the quantization process and excels in managing outliers, outperforming the state-of-the-art baselines across various sizes and types of LLMs on multiple tasks, even with 4-bit weight-activation quantization. Our code is available at https://github.com/Hsu1023/DuQuant.
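The reason a rotation can help quantization is that an orthogonal matrix $R$ leaves the layer output unchanged, since $(XR)(R^\top W) = XW$, while spreading a large activation across several channels. The sketch below illustrates only this general principle. It uses a fixed normalized Hadamard matrix as a stand-in, which is an assumption and not DuQuant's outlier-guided block-wise construction or its zigzag permutation. The helper names are hypothetical.

```python
import numpy as np

def hadamard_rotation(n):
    """Orthogonal n x n matrix (n a power of two), Sylvester construction."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    if H.shape[0] != n:
        raise ValueError("n must be a power of two")
    return H / np.sqrt(n)

def rotate_pair(X, W, R):
    """Rotate activations and counter-rotate weights so X @ W is preserved."""
    return X @ R, R.T @ W

# One token with a massive outlier in channel 0.
X = np.array([[100.0, 1.0, 1.0, 1.0]])
W = np.arange(12, dtype=float).reshape(4, 3)
R = hadamard_rotation(4)
X_rot, W_rot = rotate_pair(X, W, R)

print(np.abs(X).max(), np.abs(X_rot).max())  # largest magnitude before and after
print(np.allclose(X_rot @ W_rot, X @ W))     # the layer output is unchanged
```

A smaller peak magnitude means a low-bit quantizer can use a finer step size for the same range.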

Maximilian Stölzle, Cosimo Della Santina

rating : 7.5 - [8, 7] - Spotlight

tl;dr: We leverage input-to-state stable coupled oscillator networks for conducting model-based control in latent space.

Even though a variety of methods have been proposed in the literature, efficient and effective latent-space control (i.e., control in a learned low-dimensional space) of physical systems remains an open challenge.

We argue that a promising avenue is to leverage powerful and well-understood closed-form strategies from control theory literature in combination with learned dynamics, such as potential-energy shaping.

We identify three fundamental shortcomings in existing latent-space models that have so far prevented this powerful combination: (i) they lack the mathematical structure of a physical system, (ii) they do not inherently conserve the stability properties of the real systems, and (iii) they do not have an invertible mapping between input and latent-space forcing.

This work proposes a novel Coupled Oscillator Network (CON) model that simultaneously tackles all these issues.

More specifically, (i) we show analytically that CON is a Lagrangian system, i.e., it possesses well-defined potential and kinetic energy terms. Then, (ii) we provide a formal proof of global Input-to-State stability using Lyapunov arguments.

Moving to the experimental side, we demonstrate that CON reaches SoA performance when learning complex nonlinear dynamics of mechanical systems directly from images.

An additional methodological innovation contributing to achieving this third goal is an approximated closed-form solution for efficient integration of network dynamics, which eases efficient training.

We tackle (iii) by approximating the forcing-to-input mapping with a decoder that is trained to reconstruct the input based on the encoded latent space force.

Finally, we leverage these three properties and show that they enable latent-space control. We use an integral-saturated PID with potential force compensation and demonstrate high-quality performance on a soft robot using raw pixels as the only feedback information.

Tianhong Li, Dina Katabi, Kaiming He

rating : 7.5 - [9, 8, 5, 8] - Oral

Unconditional generation -- the problem of modeling data distribution without relying on human-annotated labels -- is a long-standing and fundamental challenge in generative models, creating a potential of learning from large-scale unlabeled data. In the literature, the generation quality of an unconditional method has been much worse than that of its conditional counterpart. This gap can be attributed to the lack of semantic information provided by labels. In this work, we show that one can close this gap by generating semantic representations in the representation space produced by a self-supervised encoder. These representations can be used to condition the image generator. This framework, called Representation-Conditioned Generation (RCG), provides an effective solution to the unconditional generation problem without using labels. Through comprehensive experiments, we observe that RCG significantly improves unconditional generation quality: e.g., it achieves a new state-of-the-art FID of 2.15 on ImageNet 256x256, largely reducing the previous best of 5.91 by a relative 64%. Our unconditional results are situated in the same tier as the leading class-conditional ones. We hope these encouraging observations will attract the community's attention to the fundamental problem of unconditional generation. Code is available at [https://github.com/LTH14/rcg](https://github.com/LTH14/rcg).

Fanxu Meng, Zhaohui Wang, Muhan Zhang

rating : 7.5 - [8, 8, 7, 7] - Spotlight

tl;dr: PiSSA reinitializes LoRA's parameters by applying SVD to the base model, which enables faster convergence and ultimately superior performance. Additionally, it can reduce quantization error compared to QLoRA.

To parameter-efficiently fine-tune (PEFT) large language models (LLMs), the low-rank adaptation (LoRA) method approximates the model changes $\Delta W \in \mathbb{R}^{m \times n}$ through the product of two matrices $A \in \mathbb{R}^{m \times r}$ and $B \in \mathbb{R}^{r \times n}$, where $r \ll \min(m, n)$, $A$ is initialized with Gaussian noise, and $B$ with zeros. LoRA **freezes the original model $W$** and **updates the "Noise \& Zero" adapter**, which may lead to slow convergence. To overcome this limitation, we introduce **P**r**i**ncipal **S**ingular values and **S**ingular vectors **A**daptation (PiSSA). PiSSA shares the same architecture as LoRA, but initializes the adapter matrices $A$ and $B$ with the principal components of the original matrix $W$, and puts the remaining components into a residual matrix $W^{res} \in \mathbb{R}^{m \times n}$ which is frozen during fine-tuning.
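The initialization described above can be sketched with a plain SVD. This is a minimal illustration, not the released implementation: splitting the top-$r$ singular values evenly between $A$ and $B$ via a square root is an assumption, and the function name `pissa_init` is hypothetical. The check at the end only verifies that $W^{res} + AB$ reproduces $W$ at initialization.

```python
import numpy as np

def pissa_init(W, r):
    """Split W (m x n) into a rank-r adapter (A, B) and a frozen residual.

    A (m x r) and B (r x n) carry the top-r singular triplets of W;
    W_res carries the remaining ones, so W_res + A @ B equals W.
    """
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    sqrt_s = np.sqrt(S[:r])              # assumed even split of singular values
    A = U[:, :r] * sqrt_s                # scale each principal column
    B = sqrt_s[:, None] * Vt[:r, :]      # scale each principal row
    W_res = (U[:, r:] * S[r:]) @ Vt[r:, :]
    return A, B, W_res

rng = np.random.default_rng(0)
W = rng.standard_normal((6, 4))
A, B, W_res = pissa_init(W, r=2)
print(A.shape, B.shape, W_res.shape)
print(np.allclose(W_res + A @ B, W))  # the model is unchanged at initialization
```

During fine-tuning only `A` and `B` would receive gradients, while `W_res` stays fixed.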

Compared to LoRA, PiSSA **updates the principal components** while **freezing the "residual" parts**, allowing faster convergence and enhanced performance. Comparative experiments of PiSSA and LoRA across 11 different models, ranging from 184M to 70B, encompassing 5 NLG and 8 NLU tasks, reveal that PiSSA consistently outperforms LoRA under identical experimental setups. On the GSM8K benchmark, Gemma-7B fine-tuned with PiSSA achieves an accuracy of 77.7\%, surpassing LoRA's 74.53\% by 3.25\%. Due to the same architecture, PiSSA is also compatible with quantization to further reduce the memory requirement of fine-tuning. Compared to QLoRA, QPiSSA (PiSSA with 4-bit quantization) exhibits smaller quantization errors in the initial stages. Fine-tuning LLaMA-3-70B on GSM8K, QPiSSA attains an accuracy of 86.05\%, exceeding the performances of QLoRA at 81.73\%. Leveraging a fast SVD technique, PiSSA can be initialized in only a few seconds, presenting a negligible cost for transitioning from LoRA to PiSSA.

Vivek Narayanaswamy, Kowshik Thopalli, Rushil Anirudh, Yamen Mubarka, Wesam A. Sakla, Jayaraman J. Thiagarajan

rating : 7.5 - [8, 8, 7, 7] - Spotlight

Anchoring is a recent, architecture-agnostic principle for training deep neural networks that has been shown to significantly improve uncertainty estimation, calibration, and extrapolation capabilities. In this paper, we systematically explore anchoring as a general protocol for training vision models, providing fundamental insights into its training and inference processes and their implications for generalization and safety. Despite its promise, we identify a critical problem in anchored training that can lead to an increased risk of learning undesirable shortcuts, thereby limiting its generalization capabilities. To address this, we introduce a new anchored training protocol that employs a simple regularizer to mitigate this issue and significantly enhances generalization. We empirically evaluate our proposed approach across datasets and architectures of varying scales and complexities, demonstrating substantial performance gains in generalization and safety metrics compared to the standard training protocol. The open-source code is available at https://software.llnl.gov/anchoring.

Gongfan Fang, Hongxu Yin, Saurav Muralidharan, Greg Heinrich, Jeff Pool, Jan Kautz, Pavlo Molchanov, Xinchao Wang

rating : 7.5 - [7, 7, 8, 8] - Spotlight

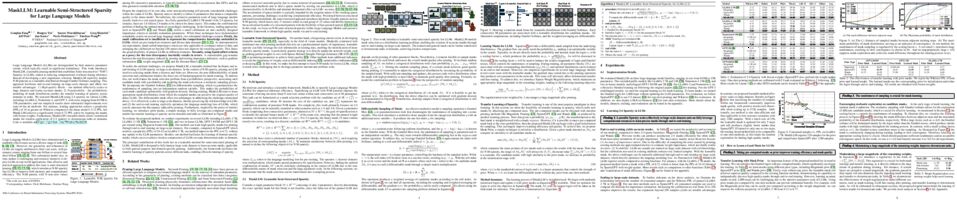

tl;dr: Learnable Semi-structured Sparsity for LLMs

Large Language Models (LLMs) are distinguished by their massive parameter counts, which typically result in significant redundancy. This work introduces MaskLLM, a learnable pruning method that establishes Semi-structured (or "N:M") Sparsity in LLMs, aimed at reducing computational overhead during inference. Instead of developing a new importance criterion, MaskLLM explicitly models N:M patterns as a learnable distribution through Gumbel Softmax sampling. This approach facilitates end-to-end training on large-scale datasets and offers two notable advantages: 1) High-quality Masks - our method effectively scales to large datasets and learns accurate masks; 2) Transferability - the probabilistic modeling of mask distribution enables the transfer learning of sparsity across domains or tasks. We assessed MaskLLM using 2:4 sparsity on various LLMs, including LLaMA-2, Nemotron-4, and GPT-3, with sizes ranging from 843M to 15B parameters, and our empirical results show substantial improvements over state-of-the-art methods. For instance, leading approaches achieve a perplexity (PPL) of 10 or greater on Wikitext compared to the dense model's 5.12 PPL, but MaskLLM achieves a significantly lower 6.72 PPL solely by learning the masks with frozen weights. Furthermore, MaskLLM's learnable nature allows customized masks for lossless application of 2:4 sparsity to downstream tasks or domains. Code is available at https://github.com/NVlabs/MaskLLM.
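To make the idea of a learnable distribution over N:M patterns concrete, the sketch below enumerates the six possible 2:4 masks for a group of four weights and draws a differentiable "soft" mask with Gumbel Softmax. It is an illustration under assumptions, not the released code: the function names, the temperature `tau`, and the per-group logits are hypothetical.

```python
import itertools
import math
import random

def candidate_masks(n=2, m=4):
    """All binary masks that keep exactly n of m consecutive weights."""
    return [[1.0 if i in kept else 0.0 for i in range(m)]
            for kept in itertools.combinations(range(m), n)]

def gumbel_softmax(logits, tau=1.0, rng=random):
    """Relaxed one-hot sample over the candidates (tau is a temperature)."""
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)          # avoid log(0)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / tau)
    top = max(noisy)
    exps = [math.exp(v - top) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]

def soft_mask(logits, tau=1.0, rng=random):
    """Training-time mask: probability-weighted blend of candidate masks."""
    cands = candidate_masks()
    probs = gumbel_softmax(logits, tau, rng)
    return [sum(p * c[i] for p, c in zip(probs, cands)) for i in range(4)]

def hard_mask(logits):
    """Inference-time mask: the candidate with the largest logit."""
    cands = candidate_masks()
    best = max(range(len(cands)), key=lambda i: logits[i])
    return cands[best]
```

For example, `hard_mask([0, 0, 5, 0, 0, 0])` selects the third candidate, which keeps the first and last weight of the group. The entries of a soft mask always sum to 2, because every candidate keeps exactly two weights.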

Zhengrui Xu, Guan'an Wang, Xiaowen Huang, Jitao Sang

rating : 7.5 - [8, 6, 8, 8] - Oral

The denoising model has proven to be a powerful generative model but has been little explored for discriminative tasks. Representation learning is important in discriminative tasks, and is defined as *"learning representations (or features) of the data that make it easier to extract useful information when building classifiers or other predictors"*. In this paper, we propose a novel Denoising Model for Representation Learning (*DenoiseRep*) to improve feature discrimination with joint feature extraction and denoising. *DenoiseRep* views each embedding layer in a backbone as a denoising layer, processing the cascaded embedding layers as if recursively denoising features step-by-step. This unifies the frameworks of feature extraction and denoising, where the former progressively embeds features from low-level to high-level, and the latter recursively denoises features step-by-step. After that, *DenoiseRep* fuses the parameters of feature extraction and denoising layers, and *theoretically demonstrates* its equivalence before and after the fusion, thus making feature denoising computation-free. *DenoiseRep* is a label-free algorithm that incrementally improves features and is also complementary to labels when they are available. Experimental results on various discriminative vision tasks, including re-identification (Market-1501, DukeMTMC-reID, MSMT17, CUHK-03, vehicleID), image classification (ImageNet, CUB200, Oxford-Pet, Flowers), object detection (COCO), and image segmentation (ADE20K), show stability and impressive improvements. We also validate its effectiveness on CNN (ResNet) and Transformer (ViT, Swin, Vmamba) architectures.

Rohan Alur, Manish Raghavan, Devavrat Shah

rating : 7.5 - [8, 7, 7, 8] - Oral

tl;dr: We introduce a novel framework for incorporating human judgment into algorithmic predictions; our approach focuses on the use of human judgment to distinguish inputs which ‘look the same’ to any feasible predictive algorithm.

We introduce a novel framework for incorporating human expertise into algorithmic predictions. Our approach leverages human judgment to distinguish inputs which are *algorithmically indistinguishable*, or "look the same" to predictive algorithms. We argue that this framing clarifies the problem of human-AI collaboration in prediction tasks, as experts often form judgments by drawing on information which is not encoded in an algorithm's training data. Algorithmic indistinguishability yields a natural test for assessing whether experts incorporate this kind of "side information", and further provides a simple but principled method for selectively incorporating human feedback into algorithmic predictions. We show that this method provably improves the performance of any feasible algorithmic predictor and precisely quantify this improvement. We find empirically that although algorithms often outperform their human counterparts *on average*, human judgment can improve algorithmic predictions on *specific* instances (which can be identified ex-ante). In an X-ray classification task, we find that this subset constitutes nearly 30% of the patient population. Our approach provides a natural way of uncovering this heterogeneity and thus enabling effective human-AI collaboration.

Rishabh Agarwal, Avi Singh, Lei M Zhang, Bernd Bohnet, Luis Rosias, Stephanie C.Y. Chan, Biao Zhang, Ankesh Anand, Zaheer Abbas, Azade Nova, John D Co-Reyes, Eric Chu, Feryal Behbahani, Aleksandra Faust, Hugo Larochelle

rating : 7.5 - [7, 7, 9, 7] - Spotlight

tl;dr: We investigate the many-shot in-context learning regime -- prompting large language models with hundreds or thousands of examples -- for a wide range of tasks.

Large language models (LLMs) excel at few-shot in-context learning (ICL) -- learning from a few examples provided in context at inference, without any weight updates. Newly expanded context windows allow us to investigate ICL with hundreds or thousands of examples -- the many-shot regime. Going from few-shot to many-shot, we observe significant performance gains across a wide variety of generative and discriminative tasks. While promising, many-shot ICL can be bottlenecked by the available amount of human-generated outputs. To mitigate this limitation, we explore two new settings: (1) "Reinforced ICL", which uses model-generated chain-of-thought rationales in place of human rationales, and (2) "Unsupervised ICL", where we remove rationales from the prompt altogether and prompt the model only with domain-specific inputs. We find that both Reinforced and Unsupervised ICL can be quite effective in the many-shot regime, particularly on complex reasoning tasks. We demonstrate that, unlike few-shot learning, many-shot learning is effective at overriding pretraining biases, can learn high-dimensional functions with numerical inputs, and performs comparably to supervised fine-tuning. Finally, we reveal the limitations of next-token prediction loss as an indicator of downstream ICL performance.

Jay Shah, Ganesh Bikshandi, Ying Zhang, Vijay Thakkar, Pradeep Ramani, Tri Dao

rating : 7.5 - [8, 7, 8, 7] - Spotlight

tl;dr: We speed up FlashAttention on modern GPUs (Hopper) with asynchrony and low-precision

Attention, as a core layer of the ubiquitous Transformer architecture, is the bottleneck for large language models and long-context applications. FlashAttention elaborated an approach to speed up attention on GPUs through minimizing memory reads/writes. However, it has yet to take advantage of new capabilities present in recent hardware, with FlashAttention-2 achieving only 35% utilization on the H100 GPU.

We develop three main techniques to speed up attention on Hopper GPUs: exploiting asynchrony of the Tensor Cores and TMA to (1) overlap overall computation and data movement via warp-specialization and (2) interleave block-wise matmul and softmax operations, and (3) block quantization and incoherent processing that leverages hardware support for FP8 low-precision. We demonstrate that our method, FlashAttention-3, achieves speedup on H100 GPUs by 1.5-2.0$\times$ with BF16 reaching up to 840 TFLOPs/s (85\% utilization), and with FP8 reaching 1.3 PFLOPs/s. We validate that FP8 FlashAttention-3 achieves 2.6$\times$ lower numerical error than a baseline FP8 attention.
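The block-wise softmax that this family of kernels relies on can be sketched for a single query in plain Python. This is only the numerical idea (a running maximum and rescaled accumulators), not the FlashAttention-3 kernel; it omits the usual 1/sqrt(d) scaling, and the function names and block size are assumptions for illustration.

```python
import math

def attention_full(q, keys, values):
    """Reference: softmax(q . k) weighted sum of values, for one query."""
    scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    norm = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / norm
            for d in range(dim)]

def attention_blockwise(q, keys, values, block=2):
    """Same result, visiting keys and values one block at a time."""
    dim = len(values[0])
    running_max = -math.inf
    denom = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block):
        kb = keys[start:start + block]
        vb = values[start:start + block]
        scores = [sum(a * b for a, b in zip(q, k)) for k in kb]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated under the previous maximum.
        scale = math.exp(running_max - new_max)
        denom *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, vb):
            w = math.exp(s - new_max)
            denom += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        running_max = new_max
    return [a / denom for a in acc]
```

Since each block only needs the running maximum, the running denominator, and the accumulator, the full score row never has to be materialized, which is what makes it possible to interleave the matmul and softmax stages.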

Apolline Mellot, Antoine Collas, Sylvain Chevallier, Alexandre Gramfort, Denis Alexander Engemann

rating : 7.5 - [8, 8, 7, 7] - Spotlight

tl;dr: This paper proposes Geodesic Optimization for Predictive Shift Adaptation to address multi-source domain adaptation where source domains have distinct y distributions in the context of brain age prediction from EEG covariance matrices.

Electroencephalography (EEG) data is often collected from diverse contexts involving different populations and EEG devices. This variability can induce distribution shifts in the data $X$ and in the biomedical variables of interest $y$, thus limiting the application of supervised machine learning (ML) algorithms. While domain adaptation (DA) methods have been developed to mitigate the impact of these shifts, such methods struggle when distribution shifts occur simultaneously in $X$ and $y$. As state-of-the-art ML models for EEG represent the data by spatial covariance matrices, which lie on the Riemannian manifold of Symmetric Positive Definite (SPD) matrices, it is appealing to study DA techniques operating on the SPD manifold. This paper proposes a novel method termed Geodesic Optimization for Predictive Shift Adaptation (GOPSA) to address test-time multi-source DA for situations in which source domains have distinct $y$ distributions. GOPSA exploits the geodesic structure of the Riemannian manifold to jointly learn a domain-specific re-centering operator representing site-specific intercepts and the regression model. We performed empirical benchmarks on the cross-site generalization of age-prediction models with resting-state EEG data from a large multi-national dataset (HarMNqEEG), which included $14$ recording sites and more than $1500$ human participants. Compared to state-of-the-art methods, our results showed that GOPSA achieved significantly higher performance on three regression metrics ($R^2$, MAE, and Spearman's $\rho$) for several source-target site combinations, highlighting its effectiveness in tackling multi-source DA with predictive shifts in EEG data analysis. Our method has the potential to combine the advantages of mixed-effects modeling with machine learning for biomedical applications of EEG, such as multicenter clinical trials.

Aaron Defazio, Xingyu Alice Yang, Ahmed Khaled, Konstantin Mishchenko, Harsh Mehta, Ashok Cutkosky

rating : 7.5 - [7, 5, 8, 10] - Oral

tl;dr: Train without learning rate schedules

Existing learning rate schedules that do not require specification of the optimization stopping step $T$ are greatly out-performed by learning rate schedules that depend on $T$. We propose an approach that avoids the need for this stopping time by eschewing the use of schedules entirely, while exhibiting state-of-the-art performance compared to schedules across a wide family of problems ranging from convex problems to large-scale deep learning problems. Our Schedule-Free approach introduces no additional hyper-parameters over standard optimizers with momentum. Our method is a direct consequence of a new theory we develop that unifies scheduling and iterate averaging. An open source implementation of our method is available at https://github.com/facebookresearch/schedule_free. Schedule-Free AdamW is the core algorithm behind our winning entry to the MLCommons 2024 AlgoPerf Algorithmic Efficiency Challenge Self-Tuning track.
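To make the link between iterate averaging and schedule-free training concrete, here is a small sketch of an SGD variant that keeps a base sequence `z`, a uniform running average `x`, and evaluates gradients at an interpolation `y` of the two. The update form, the uniform averaging weight `1 / (t + 1)`, and all names are assumptions for illustration; the linked repository is the reference for the actual method.

```python
def schedule_free_sgd(grad, w0, lr=0.1, beta=0.9, steps=1000):
    """Constant-step SGD with interpolation and uniform iterate averaging.

    grad: function returning the gradient at a point (scalar here).
    Returns the averaged iterate x, which is the one used for evaluation.
    """
    z = x = w0
    for t in range(1, steps + 1):
        # Gradient is evaluated between the base and averaged sequences.
        y = (1 - beta) * z + beta * x
        # Plain SGD step on the base sequence, with no schedule on lr.
        z = z - lr * grad(y)
        # Uniform running average of the base iterates.
        c = 1.0 / (t + 1)
        x = (1 - c) * x + c * z
    return x
```

On a simple quadratic such as f(w) = (w - 3)^2, calling `schedule_free_sgd(lambda w: 2 * (w - 3), 0.0)` drives the averaged iterate toward 3 without specifying a stopping step in advance.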

Luke Eilers, Raoul-Martin Memmesheimer, Sven Goedeke

rating : 7.5 - [8, 8, 8, 6] - Spotlight

tl;dr: We derive a generalized neural tangent kernel that describes surrogate gradient learning.

State-of-the-art neural network training methods depend on the gradient of the network function. Therefore, they cannot be applied to networks whose activation functions do not have useful derivatives, such as binary and discrete-time spiking neural networks. To overcome this problem, the activation function's derivative is commonly substituted with a surrogate derivative, giving rise to surrogate gradient learning (SGL). This method works well in practice but lacks theoretical foundation.

The neural tangent kernel (NTK) has proven successful in the analysis of gradient descent. Here, we provide a generalization of the NTK, which we call the surrogate gradient NTK, that enables the analysis of SGL. First, we study a naive extension of the NTK to activation functions with jumps, demonstrating that gradient descent for such activation functions is also ill-posed in the infinite-width limit. To address this problem, we generalize the NTK to gradient descent with surrogate derivatives, i.e., SGL. We carefully define this generalization and expand the existing key theorems on the NTK with mathematical rigor. Further, we illustrate our findings with numerical experiments. Finally, we numerically compare SGL in networks with sign activation function and finite width to kernel regression with the surrogate gradient NTK; the results confirm that the surrogate gradient NTK provides a good characterization of SGL.
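Surrogate gradient learning can be illustrated on a single neuron with a sign activation. The derivative of the sign function is zero almost everywhere, so the sketch below swaps in a smooth surrogate derivative during the backward step. The particular surrogate `1 / (1 + |u|)^2`, the squared-error loss, and the toy data are assumptions chosen for illustration, not choices taken from the paper.

```python
def sign(u):
    """Forward activation: a hard threshold with a jump at zero."""
    return 1.0 if u >= 0 else -1.0

def surrogate_derivative(u):
    """Stand-in for the derivative of sign, which is zero almost everywhere."""
    return 1.0 / (1.0 + abs(u)) ** 2

def train(xs, targets, w=-1.0, lr=0.5, epochs=20):
    """Train a one-weight neuron y = sign(w * x) with surrogate gradients."""
    for _ in range(epochs):
        for x, t in zip(xs, targets):
            u = w * x
            err = sign(u) - t  # derivative of 0.5 * (y - t)^2 w.r.t. y
            # Chain rule with the surrogate in place of d sign / du.
            w -= lr * err * surrogate_derivative(u) * x
    return w
```

With inputs `[-2, -1, 1, 2]` and targets equal to their signs, training starts from a weight with the wrong sign and ends with a positive weight that classifies all four points correctly, whereas the exact derivative would give a zero update at every step.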

Yizuo Chen, Adnan Darwiche

rating : 7.5 - [7, 8, 7, 8] - Spotlight

tl;dr: The paper studies the identifiability of causal effects under functional dependencies.

We study the identification of causal effects, motivated by two improvements to identifiability which can be attained if one knows that some variables in a causal graph are functionally determined by their parents (without needing to know the specific functions). First, an unidentifiable causal effect may become identifiable when certain variables are functional. Second, certain functional variables can be excluded from being observed without affecting the identifiability of a causal effect, which may significantly reduce the number of needed variables in observational data. Our results are largely based on an elimination procedure which removes functional variables from a causal graph while preserving key properties in the resulting causal graph, including the identifiability of causal effects.

Veeti Ahvonen, Damian Heiman, Antti Kuusisto, Carsten Lutz

rating : 7.5 - [8, 8, 8, 6] - Poster

tl;dr: The paper provides logical characterizations of recurrent graph neural network models.

In pioneering work from 2019, Barceló and coauthors identified logics that precisely match the expressive power of constant iteration-depth graph neural networks (GNNs) relative to properties definable in first-order logic. In this article, we give exact logical characterizations of recurrent GNNs in two scenarios: (1) in the setting with floating-point numbers and (2) with reals. For floats, the formalism matching recurrent GNNs is a rule-based modal logic with counting, while for reals we use a suitable infinitary modal logic, also with counting. These results give exact matches between logics and GNNs in the recurrent setting without relativising to a background logic in either case, but using some natural assumptions about floating-point arithmetic. Applying our characterizations, we also prove that, relative to graph properties definable in monadic second-order logic (MSO), our infinitary and rule-based logics are equally expressive. This implies that recurrent GNNs with reals and floats have the same expressive power over MSO-definable properties and shows that, for such properties, also recurrent GNNs with reals are characterized by a (finitary!) rule-based modal logic. In the general case, in contrast, the expressive power with floats is weaker than with reals. In addition to logic-oriented results, we also characterize recurrent GNNs, with both reals and floats, via distributed automata, drawing links to distributed computing models.

Michael Luo, Justin Wong, Brandon Trabucco, Yanping Huang, Joseph E. Gonzalez, Zhifeng Chen, Russ Salakhutdinov, Ion Stoica

rating : 7.5 - [7, 7, 9, 7] - Oral

tl;dr: Stylus automatically selects and composes adapters from a vast database of adapters for diffusion-based models.

Beyond scaling base models with more data or parameters, fine-tuned adapters provide an alternative way to generate high fidelity, custom images at reduced costs. As such, adapters have been widely adopted by open-source communities, accumulating a database of over 100K adapters, most of which are highly customized with insufficient descriptions. To generate high quality images, this paper explores the problem of matching the prompt to a set of relevant adapters, building on recent work that highlights the performance gains of composing adapters. We introduce Stylus, which efficiently selects and automatically composes task-specific adapters based on a prompt's keywords. Stylus outlines a three-stage approach that first summarizes adapters with improved descriptions and embeddings, then retrieves relevant adapters, and finally assembles adapters based on prompts' keywords by checking how well they fit the prompt. To evaluate Stylus, we developed StylusDocs, a curated dataset featuring 75K adapters with pre-computed adapter embeddings. In our evaluation on popular Stable Diffusion checkpoints, Stylus achieves greater CLIP/FID Pareto efficiency and is twice as preferred, with humans and multimodal models as evaluators, over the base model.

Lianmin Zheng, Liangsheng Yin, Zhiqiang Xie, Chuyue Sun, Jeff Huang, Cody Hao Yu, Shiyi Cao, Christos Kozyrakis, Ion Stoica, Joseph E. Gonzalez, Clark Barrett, Ying Sheng

rating : 7.4 - [8, 7, 8, 7, 7] - Poster

tl;dr: We introduce a system to simplify the programming and accelerate the execution of complex structured language model programs.

Large language models (LLMs) are increasingly used for complex tasks that require multiple generation calls, advanced prompting techniques, control flow, and structured inputs/outputs. However, efficient systems are lacking for programming and executing these applications. We introduce SGLang, a system for efficient execution of complex language model programs. SGLang consists of a frontend language and a runtime. The frontend simplifies programming with primitives for generation and parallelism control. The runtime accelerates execution with novel optimizations like RadixAttention for KV cache reuse and compressed finite state machines for faster structured output decoding. Experiments show that SGLang achieves up to $6.4\times$ higher throughput compared to state-of-the-art inference systems on various large language and multi-modal models on tasks including agent control, logical reasoning, few-shot learning benchmarks, JSON decoding, retrieval-augmented generation pipelines, and multi-turn chat. The code is publicly available at https://github.com/sgl-project/sglang.
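The idea of reusing cached work across requests that share a prompt prefix can be illustrated with a toy prefix tree over token IDs. This is a plain, uncompressed trie written for illustration; it is not SGLang's RadixAttention implementation or API, and the class and method names are hypothetical.

```python
class PrefixNode:
    """One cached token position; children are keyed by the next token ID."""
    def __init__(self):
        self.children = {}

class PrefixCache:
    """Tracks which token prefixes have already been processed."""
    def __init__(self):
        self.root = PrefixNode()

    def match_and_insert(self, tokens):
        """Return how many leading tokens were cached, then cache the rest."""
        node = self.root
        reused = 0
        for token in tokens:
            child = node.children.get(token)
            if child is None:
                # First miss: everything from here on is new work.
                child = PrefixNode()
                node.children[token] = child
            else:
                reused += 1
            node = child
        return reused
```

For example, after a request with tokens `[1, 2, 3, 4]`, a second request `[1, 2, 3, 9]` would report three reusable positions, which is the portion of the KV cache a runtime could skip recomputing.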

Joongkyu Lee, Min-hwan Oh

rating : 7.4 - [7, 7, 9, 7, 7] - Poster

tl;dr: In this paper, we investigate an adversarial contextual multinomial logit (MNL) bandit problem and establish minimax optimal lower and upper regret bounds.

In this paper, we study the contextual multinomial logit (MNL) bandit problem in which a learning agent sequentially selects an assortment based on contextual information, and user feedback follows an MNL choice model.

There has been a significant discrepancy between lower and upper regret bounds, particularly regarding the maximum assortment size $K$. Additionally, the variation in reward structures between these bounds complicates the quest for optimality. Under uniform rewards, where all items have the same expected reward, we establish a regret lower bound of $\Omega(d\sqrt{\smash[b]{T/K}})$ and propose a constant-time algorithm, OFU-MNL+, that achieves a matching upper bound of $\tilde{\mathcal{O}}(d\sqrt{\smash[b]{T/K}})$.

We also provide instance-dependent minimax regret bounds under uniform rewards.

Under non-uniform rewards, we prove a lower bound of $\Omega(d\sqrt{T})$ and an upper bound of $\tilde{\mathcal{O}}(d\sqrt{T})$, also achievable by OFU-MNL+. Our empirical studies support these theoretical findings. To the best of our knowledge, this is the first work in the contextual MNL bandit literature to prove minimax optimality --- for either uniform or non-uniform reward setting --- and to propose a computationally efficient algorithm that achieves this optimality up to logarithmic factors.
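For readers unfamiliar with the MNL choice model, the following sketch computes choice probabilities for an offered assortment under a linear utility model. It assumes the common convention of an outside option (no choice) with utility zero; the function names and the parameter vector `theta` are illustrative and not taken from the paper.

```python
import math

def mnl_choice_probabilities(features, theta):
    """Return (per-item choice probabilities, outside-option probability)."""
    utilities = [sum(f * t for f, t in zip(x, theta)) for x in features]
    # Shift by the largest utility (including the outside option's 0) for stability.
    top = max(0.0, max(utilities))
    weights = [math.exp(u - top) for u in utilities]
    outside = math.exp(0.0 - top)
    total = outside + sum(weights)
    return [w / total for w in weights], outside / total

def expected_reward(features, theta, rewards):
    """Expected reward of offering this assortment once."""
    probs, _ = mnl_choice_probabilities(features, theta)
    return sum(p * r for p, r in zip(probs, rewards))
```

With three items of zero utility, each item and the outside option are chosen with probability 1/4, so under uniform rewards of 1 the expected reward is 3/4. This shows how the assortment size $K$ enters the reward in the uniform case.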

Lanqing Li, Hai Zhang, Xinyu Zhang, Shatong Zhu, Yang YU, Junqiao Zhao, Pheng-Ann Heng

rating : 7.33 - [8, 7, 7] - Spotlight

tl;dr: We propose a novel information theoretic framework of the context-based offline meta-RL paradigm, which unifies several mainstream methods and leads to two robust algorithm implementations.

As a marriage between offline RL and meta-RL, offline meta-reinforcement learning (OMRL) has shown great promise in enabling RL agents to multi-task and quickly adapt while acquiring knowledge safely. Within OMRL, context-based OMRL (COMRL) is a popular paradigm that aims to learn a universal policy conditioned on effective task representations. In this work, by examining several key milestones in the field of COMRL, we propose to integrate these seemingly independent methodologies into a unified framework. Most importantly, we show that the pre-existing COMRL algorithms are essentially optimizing the same mutual information objective between the task variable $M$ and its latent representation $Z$ by implementing various approximate bounds. Such theoretical insight offers ample design freedom for novel algorithms. As demonstrations, we propose a supervised and a self-supervised implementation of $I(Z; M)$, and empirically show that the corresponding optimization algorithms exhibit remarkable generalization across a broad spectrum of RL benchmarks, context shift scenarios, data qualities and deep learning architectures. This work lays the information theoretic foundation for COMRL methods, leading to a better understanding of task representation learning in the context of reinforcement learning.

Yue Liu, Yunjie Tian, Yuzhong Zhao, Hongtian Yu, Lingxi Xie, Yaowei Wang, Qixiang Ye, Jianbin Jiao, Yunfan Liu

rating : 7.33 - [7, 8, 7] - Spotlight

tl;dr: This paper introduces a new architecture, based on Mamba, with promising performance on visual perception tasks.

Designing computationally efficient network architectures remains an ongoing necessity in computer vision. In this paper, we adapt Mamba, a state-space language model, into VMamba, a vision backbone with linear time complexity. At the core of VMamba is a stack of Visual State-Space (VSS) blocks with the 2D Selective Scan (SS2D) module. By traversing along four scanning routes, SS2D bridges the gap between the ordered nature of 1D selective scan and the non-sequential structure of 2D vision data, which facilitates the collection of contextual information from various sources and perspectives. Based on the VSS blocks, we develop a family of VMamba architectures and accelerate them through a succession of architectural and implementation enhancements. Extensive experiments demonstrate VMamba’s promising performance across diverse visual perception tasks, highlighting its superior input scaling efficiency compared to existing benchmark models. Source code is available at https://github.com/MzeroMiko/VMamba.

Adhyyan Narang, Andrew Wagenmaker, Lillian J. Ratliff, Kevin Jamieson

rating : 7.33 - [8, 7, 7] - Spotlight

In this paper, we study the non-asymptotic sample complexity for the pure exploration problem in contextual bandits and tabular reinforcement learning (RL): identifying an $\epsilon$-optimal policy from a set of policies $\Pi$ with high probability. Existing work in bandits has shown that it is possible to identify the best policy by estimating only the *difference* between the behaviors of individual policies, which can have substantially lower variance than estimating the behavior of each policy directly, yet the best-known complexities in RL fail to take advantage of this, and instead estimate the behavior of each policy directly. Does it suffice to estimate only the differences in the behaviors of policies in RL? We answer this question positively for contextual bandits, but in the negative for tabular RL, showing a separation between contextual bandits and RL. However, inspired by this, we show that it *almost* suffices to estimate only the differences in RL: if we can estimate the behavior of a *single* reference policy, it suffices to only estimate how any other policy deviates from this reference policy. We develop an algorithm which instantiates this principle and obtains, to the best of our knowledge, the tightest known bound on the sample complexity of tabular RL.

Xin Chen, Anderson Ye Zhang

rating : 7.33 - [6, 8, 8] - Oral

tl;dr: We study clustering in anisotropic Gaussian Mixture Models by establishing minimax bounds and introducing a variant of Lloyd’s algorithm that achieves the minimax optimality provably.

We study clustering under anisotropic Gaussian Mixture Models (GMMs), where covariance matrices from different clusters are unknown and are not necessarily the identity matrix. We analyze two anisotropic scenarios: homogeneous, with identical covariance matrices, and heterogeneous, with distinct matrices per cluster. For these models, we derive minimax lower bounds that illustrate the critical influence of covariance structures on clustering accuracy. To solve the clustering problem, we consider a variant of Lloyd's algorithm, adapted to estimate and utilize covariance information iteratively. We prove that the adjusted algorithm not only achieves the minimax optimality but also converges within a logarithmic number of iterations, thus bridging the gap between theoretical guarantees and practical efficiency.
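A covariance-aware Lloyd iteration for the homogeneous case can be sketched in two dimensions as follows: assign each point to the nearest center in Mahalanobis distance, update the centers, then re-estimate a shared covariance from the within-cluster residuals. This is a simplified illustration under stated assumptions (2D points, a shared non-singular covariance, reasonable initial centers) and is not the exact algorithm analyzed in the paper; all names are hypothetical.

```python
def mahalanobis_sq(p, c, inv):
    """Squared Mahalanobis distance between 2D points p and c."""
    dx, dy = p[0] - c[0], p[1] - c[1]
    return inv[0][0] * dx * dx + 2 * inv[0][1] * dx * dy + inv[1][1] * dy * dy

def assign(points, centers, inv):
    """Label each point with the index of its nearest center."""
    return [min(range(len(centers)),
                key=lambda j: mahalanobis_sq(p, centers[j], inv))
            for p in points]

def pooled_inverse_cov(points, labels, centers):
    """Inverse of the shared 2x2 covariance of within-cluster residuals."""
    sxx = sxy = syy = 0.0
    for p, z in zip(points, labels):
        dx, dy = p[0] - centers[z][0], p[1] - centers[z][1]
        sxx, sxy, syy = sxx + dx * dx, sxy + dx * dy, syy + dy * dy
    n = len(points)
    a, b, d = sxx / n, sxy / n, syy / n
    det = a * d - b * b  # assumed non-zero in this sketch
    return [[d / det, -b / det], [-b / det, a / det]]

def lloyd_shared_cov(points, centers, iters=5):
    """Alternate assignment, center update, and covariance re-estimation."""
    inv = [[1.0, 0.0], [0.0, 1.0]]  # first pass is ordinary Euclidean Lloyd
    for _ in range(iters):
        labels = assign(points, centers, inv)
        new_centers = []
        for j, old in enumerate(centers):
            members = [p for p, z in zip(points, labels) if z == j]
            if members:
                new_centers.append((sum(p[0] for p in members) / len(members),
                                    sum(p[1] for p in members) / len(members)))
            else:
                new_centers.append(old)  # keep an empty cluster's center
        centers = new_centers
        inv = pooled_inverse_cov(points, labels, centers)
    return labels, centers
```

The effect of the covariance is visible in the assignment step alone: with centers `(0, 0)` and `(3, 1)` and covariance `diag(9, 0.01)`, the point `(3, 0.2)` is closer to the second center in Euclidean distance but closer to the first in Mahalanobis distance.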

Peng Wang, Songshuo Lu, Yaohua Tang, Sijie Yan, Wei Xia, Yuanjun Xiong

rating : 7.33 - [6, 8, 8] - Poster

tl;dr: This work formalizes the problem of full-duplex voice conversation with LLM and presents a method towards this goal.

We present a generative dialogue system capable of operating in a full-duplex manner, allowing for seamless interaction. It is based on a large language model (LLM) carefully aligned to be aware of a perception module, a motor function module, and the concept of a simple finite state machine (called neural FSM) with two states. The perception and motor function modules operate in tandem, allowing the system to speak and listen to the user simultaneously. The LLM generates textual tokens for inquiry responses and makes autonomous decisions to start responding to, wait for, or interrupt the user by emitting control tokens to the neural FSM. All these tasks of the LLM are carried out as next token prediction on a serialized view of the dialogue in real-time. In automatic quality evaluations simulating real-life interaction, the proposed system reduces the average conversation response latency by more than threefold compared with LLM-based half-duplex dialogue systems while responding within less than 500 milliseconds in more than 50% of evaluated interactions. Running an LLM with only 8 billion parameters, our system exhibits an 8% higher interruption precision rate than the best available commercial LLM for voice-based dialogue.

Alex Oesterling, Claudio Mayrink Verdun, Alexander Glynn, Carol Xuan Long, Lucas Monteiro Paes, Sajani Vithana, Martina Cardone, Flavio Calmon

rating : 7.33 - [6, 9, 7] - Poster

tl;dr: We introduce a novel metric for ensuring multi-group proportional representation over sets of images. We apply this metric to retrieval and propose an algorithm that maximizes similarity under a multi-group proportional representation constraint.

Image search and retrieval tasks can perpetuate harmful stereotypes, erase cultural identities, and amplify social disparities. Current approaches to mitigate these representational harms balance the number of retrieved items across population groups defined by a small number of (often binary) attributes. However, most existing methods overlook intersectional groups determined by combinations of group attributes, such as gender, race, and ethnicity. We introduce Multi-Group Proportional Representation (MPR), a novel metric that measures representation across intersectional groups. We develop practical methods for estimating MPR, provide theoretical guarantees, and propose optimization algorithms to ensure MPR in retrieval. We demonstrate that existing methods optimizing for equal and proportional representation metrics may fail to promote MPR. Crucially, our work shows that optimizing MPR yields more proportional representation across multiple intersectional groups specified by a rich function class, often with minimal compromise in retrieval accuracy. Code is provided at https://github.com/alex-oesterling/multigroup-proportional-representation.

Yiwen Qiu, Yujia Zheng, Kun Zhang

rating : 7.33 - [8, 8, 6] - Poster

tl;dr: This paper addresses the issue of subtask discovery from a causal perspective in Imitation Learning by identifying subgoals as selections

When solving long-horizon tasks, it is appealing to decompose the high-level task into subtasks. Decomposing experiences into reusable subtasks can improve data efficiency, accelerate policy generalization, and in general provide promising solutions to multi-task reinforcement learning and imitation learning problems. However, the concept of subtasks is not yet sufficiently understood or modeled, and existing works often overlook the true structure of the data generation process: subtasks are the results of a *selection* mechanism on actions, rather than possible underlying confounders or intermediates. Specifically, we provide a theory to identify, and experiments to verify, the existence of selection variables in such data. These selections serve as subgoals that indicate subtasks and guide policy. In light of this idea, we develop a sequential non-negative matrix factorization (seq-NMF) method to learn these subgoals and extract meaningful behavior patterns as subtasks. Our empirical results on a challenging Kitchen environment demonstrate that the learned subtasks effectively enhance the generalization to new tasks in multi-task imitation learning scenarios. The codes are provided at this [*link*](https://anonymous.4open.science/r/Identifying\_Selections\_for\_Unsupervised\_Subtask\_Discovery/README.md).

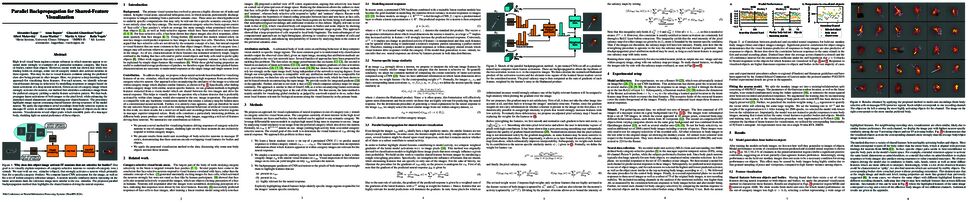

Leon Lufkin, Andrew M Saxe, Erin Grant

rating : 7.33 - [7, 8, 7] - Spotlight

tl;dr: We analyze the dynamics of emergent localization in receptive fields of a nonlinear neural network, which enables us to bind emergence to specific higher-order statistical properties of the input data.

Localized receptive fields (neurons that are selective for certain contiguous spatiotemporal features of their input) populate early sensory regions of the mammalian brain. Unsupervised learning algorithms that optimize explicit sparsity or independence criteria replicate features of these localized receptive fields, but fail to explain directly how localization arises through learning without efficient coding, as occurs in early layers of deep neural networks and might occur in early sensory regions of biological systems. We consider an alternative model in which localized receptive fields emerge without explicit top-down efficiency constraints: a feed-forward neural network trained on a data model inspired by the structure of natural images. Previous work identified the importance of non-Gaussian statistics to localization in this setting but left open questions about the mechanisms driving dynamical emergence. We address these questions by deriving the effective learning dynamics for a single nonlinear neuron, making precise how higher-order statistical properties of the input data drive emergent localization, and we demonstrate that the predictions of these effective dynamics extend to the many-neuron setting. Our analysis provides an alternative explanation for the ubiquity of localization as resulting from the nonlinear dynamics of learning in neural circuits.

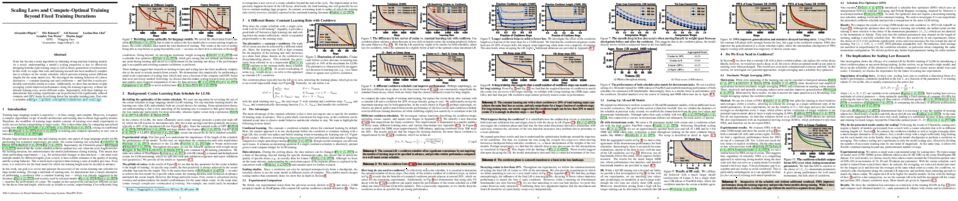

Alexander Hägele, Elie Bakouch, Atli Kosson, Loubna Ben allal, Leandro Von Werra, Martin Jaggi

rating : 7.33 - [8, 9, 5] - Spotlight

tl;dr: We show reliable scaling behavior of an alternative LR schedule as well as stochastic weight averaging for LLM training, thereby making scaling law experiments more accessible.

Scale has become a main ingredient in obtaining strong machine learning models. As a result, understanding a model's scaling properties is key to effectively designing both the right training setup as well as future generations of architectures. In this work, we argue that scale and training research has been needlessly complex due to reliance on the cosine schedule, which prevents training across different lengths for the same model size. We investigate the training behavior of a direct alternative --- constant learning rate and cooldowns --- and find that it scales predictably and reliably similar to cosine. Additionally, we show that stochastic weight averaging yields improved performance along the training trajectory, without additional training costs, across different scales. Importantly, with these findings we demonstrate that scaling experiments can be performed with significantly reduced compute and GPU hours by utilizing fewer but reusable training runs. Our code is available at https://github.com/epfml/schedules-and-scaling/.
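The practical difference between the two schedules can be seen in a few lines. The sketch below defines a constant learning rate with a final cooldown next to a cosine schedule; the linear cooldown shape, the 20% cooldown fraction, and the function names are assumptions for illustration and are not taken from the linked repository.

```python
import math

def constant_with_cooldown(step, total_steps, peak_lr, cooldown_frac=0.2):
    """Constant LR, then a linear decay to zero over the final fraction."""
    cooldown_steps = int(round(total_steps * cooldown_frac))
    if cooldown_steps == 0 or step < total_steps - cooldown_steps:
        return peak_lr
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / cooldown_steps

def cosine(step, total_steps, peak_lr):
    """Cosine decay from peak_lr to zero over total_steps."""
    return 0.5 * peak_lr * (1 + math.cos(math.pi * step / total_steps))
```

At step 50, the constant schedule returns the same learning rate whether the run is planned for 100 or 200 steps, so a checkpoint from the constant phase can be reused and cooled down at several lengths. The cosine value at step 50 depends on the total length, which is why each training length needs its own run.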

Songlin Yang, Bailin Wang, Yu Zhang, Yikang Shen, Yoon Kim

rating : 7.33 - [7, 8, 7] - Poster

tl;dr: DeltaNet, i.e., Linear Transformer with delta rule, suffers from slow recurrent training. This work develops a novel algorithm that enables the parallelization of training over sequence length, allowing for large-scale experiments

Transformers with linear attention (i.e., linear transformers) and state-space models have recently been suggested as a viable linear-time alternative to transformers with softmax attention. However, these models still underperform transformers especially on tasks that require in-context retrieval. While more expressive variants of linear transformers which replace the additive update in linear transformers with the delta rule (DeltaNet) have been found to be more effective at associative recall, existing algorithms for training such models do not parallelize over sequence length and are thus inefficient to train on modern hardware. This work describes a hardware-efficient algorithm for training linear transformers with the delta rule, which exploits a memory-efficient representation for computing products of Householder matrices. This algorithm allows us to scale up DeltaNet to standard language modeling settings. We train a 1.3B model for 100B tokens and find that it outperforms recent linear-time baselines such as Mamba and GLA in terms of perplexity and zero-shot performance on downstream tasks. We also experiment with two hybrid models which combine DeltaNet layers with (1) sliding-window attention layers every other layer or (2) two global attention layers, and find that these hybrids outperform strong transformer baselines.
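For readers unfamiliar with the delta rule, the sketch below shows the sequential (token-by-token) recurrence in plain Python. It illustrates only the slow recurrent form that motivates the paper, not the parallel, hardware-efficient algorithm the paper contributes. The function name, the list-based matrix layout, and the per-token step size `betas` are assumptions made for this example.

```python
def delta_rule_recurrent(queries, keys, values, betas):
    """Sequential delta-rule memory: S <- S + beta * (v - S k) k^T, output o = S q.

    S is a d_v x d_k matrix stored as nested lists. This loop is inherently
    sequential over tokens, which is the inefficiency the abstract describes.
    """
    d_k, d_v = len(keys[0]), len(values[0])
    S = [[0.0] * d_k for _ in range(d_v)]
    outputs = []
    for q, k, v, beta in zip(queries, keys, values, betas):
        # Value currently stored under key k.
        pred = [sum(S[i][j] * k[j] for j in range(d_k)) for i in range(d_v)]
        # Move the stored value toward v (an additive linear-attention update
        # would instead simply add v k^T).
        for i in range(d_v):
            err = v[i] - pred[i]
            for j in range(d_k):
                S[i][j] += beta * err * k[j]
        outputs.append([sum(S[i][j] * q[j] for j in range(d_k)) for i in range(d_v)])
    return outputs, S


# Writing twice under the same unit-norm key with beta = 1 overwrites the old value.
key = [1.0, 0.0]
outs, state = delta_rule_recurrent(
    queries=[key, key],
    keys=[key, key],
    values=[[2.0, 3.0], [5.0, 7.0]],
    betas=[1.0, 1.0],
)
```

In this toy case the second query retrieves the second value alone, whereas a purely additive update would return the sum of both values; this is the associative-recall behavior the abstract attributes to the delta rule.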

Ziheng Chen, Yue Song, Rui Wang, Xiaojun Wu, Nicu Sebe

rating : 7.33 - [8, 7, 7] - Poster

tl;dr: We propose a general framework for building intrinsic Riemannian classifiers for general geometries, and showcase our framework on the SPD manifold and special orthogonal group.

Riemannian neural networks, which extend deep learning techniques to Riemannian spaces, have gained significant attention in machine learning. To better classify the manifold-valued features, researchers have started extending Euclidean multinomial logistic regression (MLR) into Riemannian manifolds. However, existing approaches suffer from limited applicability due to their strong reliance on specific geometric properties. This paper proposes a framework for designing Riemannian MLR over general geometries, referred to as RMLR. Our framework only requires minimal geometric properties, thus exhibiting broad applicability and enabling its use with a wide range of geometries. Specifically, we showcase our framework on the Symmetric Positive Definite (SPD) manifold and special orthogonal group, i.e., the set of rotation matrices. On the SPD manifold, we develop five families of SPD MLRs under five types of power-deformed metrics. On rotation matrices we propose Lie MLR based on the popular bi-invariant metric. Extensive experiments on different Riemannian backbone networks validate the effectiveness of our framework.

Christoph Jansen, Georg Schollmeyer, Julian Rodemann, Hannah Blocher, Thomas Augustin

rating : 7.33 - [7, 8, 7] - Spotlight

tl;dr: We propose the GSD-front for reliable multicriteria benchmarking of classifiers, give conditions for its consistent estimability, propose (robust) statistical tests for checking if a classifier is contained, and illustrate it on two benchmark suites.

Given the vast number of classifiers that have been (and continue to be) proposed, reliable methods for comparing them are becoming increasingly important. The desire for reliability is broken down into three main aspects: (1) Comparisons should allow for different quality metrics simultaneously. (2) Comparisons should take into account the statistical uncertainty induced by the choice of benchmark suite. (3) The robustness of the comparisons under small deviations in the underlying assumptions should be verifiable. To address (1), we propose to compare classifiers using a generalized stochastic dominance ordering (GSD) and present the GSD-front as an information-efficient alternative to the classical Pareto-front. For (2), we propose a consistent statistical estimator for the GSD-front and construct a statistical test for whether a (potentially new) classifier lies in the GSD-front of a set of state-of-the-art classifiers. For (3), we relax our proposed test using techniques from robust statistics and imprecise probabilities. We illustrate our concepts on the benchmark suite PMLB and on the platform OpenML.
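As a point of reference for aspect (1), the classical Pareto-front that the GSD-front is contrasted with can be sketched in a few lines of Python. This is the baseline notion only, not the GSD-front or its estimator. The classifier names and scores are hypothetical, and the sketch assumes that higher is better for every metric.

```python
def dominates(a, b):
    """True if score vector a is at least as good as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(scores):
    """Return the names of classifiers not dominated by any other classifier.

    scores maps a classifier name to a tuple of quality metrics
    (assumed here: higher is better for each metric).
    """
    return sorted(
        name
        for name, s in scores.items()
        if not any(dominates(t, s) for other, t in scores.items() if other != name)
    )


# Hypothetical (accuracy, robustness) scores for three classifiers.
example_scores = {"A": (0.9, 0.5), "B": (0.8, 0.7), "C": (0.7, 0.4)}
front = pareto_front(example_scores)
```

Here `C` is dominated by both `A` and `B`, while `A` and `B` are mutually incomparable, so the front is `["A", "B"]`.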

Arthur da Cunha, Mikael Møller Høgsgaard, Kasper Green Larsen

rating : 7.33 - [7, 7, 8] - Oral

tl;dr: We settle the parallel complexity of Boosting algorithms that are nearly sample-optimal

Recent works on the parallel complexity of Boosting have established strong lower bounds on the tradeoff between the number of training rounds $p$ and the total parallel work per round $t$. These works have also presented highly non-trivial parallel algorithms that shed light on different regions of this tradeoff. Despite these advancements, a significant gap persists between the theoretical lower bounds and the performance of these algorithms across much of the tradeoff space. In this work, we essentially close this gap by providing both improved lower bounds on the parallel complexity of weak-to-strong learners, and a parallel Boosting algorithm whose performance matches these bounds across the entire $p$ vs. $t$ compromise spectrum, up to logarithmic factors. Ultimately, this work settles the parallel complexity of Boosting algorithms that are nearly sample-optimal.

Baojian Zhou, Yifan Sun, Reza Babanezhad Harikandeh, Xingzhi Guo, Deqing Yang, Yanghua Xiao

rating : 7.33 - [7, 9, 6] - Poster

tl;dr: This paper introduces a novel approach to efficiently solve sparse linear systems by localizing standard iterative solvers using a locally evolving set process.

Given the damping factor $\alpha$ and precision tolerance $\epsilon$, Andersen et al. (2006) introduced Approximate Personalized PageRank (APPR), the *de facto local method* for approximating the PPR vector, with runtime bounded by $\Theta(1/(\alpha\epsilon))$ independent of the graph size. Recently, Fountoulakis & Yang asked whether faster local algorithms could be developed using $\tilde{\mathcal{O}}(1/(\sqrt{\alpha}\epsilon))$ operations. By noticing that APPR is a local variant of Gauss-Seidel, this paper explores the question of *whether standard iterative solvers can be effectively localized*. We propose to use the *locally evolving set process*, a novel framework to characterize the algorithm locality, and demonstrate that many standard solvers can be effectively localized. Let $\overline{\operatorname{vol}}(\mathcal{S}_t)$ and $\overline{\gamma_t}$ be the running average of volume and the residual ratio of active nodes $\mathcal{S}_t$ during the process. We show $\overline{\operatorname{vol}}(\mathcal{S}_t)/\overline{\gamma_t} \leq 1/\epsilon$ and prove APPR admits a new runtime bound $\tilde{\mathcal{O}}(\overline{\operatorname{vol}}(\mathcal{S}_t)/(\alpha\overline{\gamma_t}))$ mirroring the actual performance. Furthermore, when the geometric mean of residual reduction is $\Theta(\sqrt{\alpha})$, then there exists $c \in (0,2)$ such that the local Chebyshev method has runtime $\tilde{\mathcal{O}}(\overline{\operatorname{vol}}(\mathcal{S}_t)/(\sqrt{\alpha}(2-c)))$ without the monotonicity assumption. Numerical results confirm the efficiency of this novel framework and show up to a hundredfold speedup over corresponding standard solvers on real-world graphs.
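The locality of APPR is easiest to see in code. The sketch below is one common non-lazy variant of the push procedure, written in plain Python. It is not the paper's locally evolving set framework or its localized solvers. The function name, the FIFO queue order, and the adjacency-list representation are assumptions, and every node is assumed to have at least one neighbor.

```python
from collections import deque


def appr_push(graph, source, alpha=0.15, eps=1e-4):
    """Approximate personalized PageRank by local push operations.

    graph: dict mapping node -> list of neighbors (undirected, no isolated nodes).
    Returns (p, r): the estimate p and residual r, with r[u] < eps * deg(u)
    for every node u on termination.
    """
    p = {}
    r = {source: 1.0}
    queue = deque([source])
    queued = {source}
    while queue:
        u = queue.popleft()
        queued.discard(u)
        deg = len(graph[u])
        ru = r.get(u, 0.0)
        if ru < eps * deg:
            continue  # residual already small enough, nothing to push
        # Push: keep an alpha fraction at u, spread the rest over its neighbors.
        p[u] = p.get(u, 0.0) + alpha * ru
        r[u] = 0.0
        share = (1.0 - alpha) * ru / deg
        for v in graph[u]:
            r[v] = r.get(v, 0.0) + share
            if v not in queued and r[v] >= eps * len(graph[v]):
                queue.append(v)
                queued.add(v)
    return p, r


# Small hypothetical graph: a triangle 0-1-2 with a pendant node 3 attached to 2.
toy_graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
estimate, residual = appr_push(toy_graph, source=0, alpha=0.15, eps=1e-4)
```

Each push moves at least $\alpha\epsilon\, d(u)$ of mass into `p` while touching $d(u)$ neighbors, and the total mass is 1, which gives the $1/(\alpha\epsilon)$ work bound independent of graph size. Only nodes whose residual exceeds the threshold are ever visited, which is what "local" means here.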

Zixuan Gong, Guangyin Bao, Qi Zhang, Zhongwei Wan, Duoqian Miao, Shoujin Wang, Lei Zhu, Changwei Wang, Rongtao Xu, Liang Hu, Ke Liu, Yu Zhang

rating : 7.33 - [7, 8, 7] - Oral

tl;dr: This paper proposes NeuroClips, a new state-of-the-art fMRI-to-video reconstruction framework, achieving smooth high-fidelity video reconstruction of up to 6s at 8FPS.

Reconstruction of static visual stimuli from non-invasive brain activity (fMRI) has achieved great success, owing to advanced deep learning models such as CLIP and Stable Diffusion. However, research on fMRI-to-video reconstruction remains limited, since decoding the spatiotemporal perception of continuous visual experiences is formidably challenging. We contend that the key to addressing these challenges lies in accurately decoding both high-level semantics and low-level perception flows, as perceived by the brain in response to video stimuli. To this end, we propose NeuroClips, an innovative framework to decode high-fidelity and smooth video from fMRI. NeuroClips utilizes a semantics reconstructor to reconstruct video keyframes, guiding semantic accuracy and consistency, and employs a perception reconstructor to capture low-level perceptual details, ensuring video smoothness. During inference, it adopts a pre-trained T2V diffusion model injected with both keyframes and low-level perception flows for video reconstruction. Evaluated on a publicly available fMRI-video dataset, NeuroClips achieves smooth high-fidelity video reconstruction of up to 6s at 8FPS, gaining significant improvements over state-of-the-art models in various metrics, e.g., a 128% improvement in SSIM and an 81% improvement in spatiotemporal metrics. Our project is available at https://github.com/gongzix/NeuroClips.

48. Almost-Linear RNNs Yield Highly Interpretable Symbolic Codes in Dynamical Systems Reconstruction

Manuel Brenner, Christoph Jürgen Hemmer, Zahra Monfared, Daniel Durstewitz

rating : 7.33 - [8, 7, 7] - Poster

tl;dr: We introduce Almost-Linear Recurrent Neural Networks (AL-RNNs) to derive highly interpretable piecewise-linear models of dynamical systems from time-series data.

Dynamical systems theory (DST) is fundamental for many areas of science and engineering. It can provide deep insights into the behavior of systems evolving in time, as typically described by differential or recursive equations. A common approach to facilitate mathematical tractability and interpretability of DS models involves decomposing nonlinear DS into multiple linear DS combined by switching manifolds, i.e. piecewise linear (PWL) systems. PWL models are popular in engineering and a frequent choice in mathematics for analyzing the topological properties of DS. However, hand-crafting such models is tedious and only possible for very low-dimensional scenarios, while inferring them from data usually gives rise to unnecessarily complex representations with very many linear subregions. Here we introduce Almost-Linear Recurrent Neural Networks (AL-RNNs) which automatically and robustly produce most parsimonious PWL representations of DS from time series data, using as few PWL nonlinearities as possible. AL-RNNs can be efficiently trained with any SOTA algorithm for dynamical systems reconstruction (DSR), and naturally give rise to a symbolic encoding of the underlying DS that provably preserves important topological properties. We show that for the Lorenz and Rössler systems, AL-RNNs derive, in a purely data-driven way, the known topologically minimal PWL representations of the corresponding chaotic attractors. We further illustrate on two challenging empirical datasets that interpretable symbolic encodings of the dynamics can be achieved, tremendously facilitating mathematical and computational analysis of the underlying systems.
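The idea of "as few PWL nonlinearities as possible" and the resulting symbolic encoding can be illustrated with a small Python sketch. The update rule used here (a diagonal linear term plus a weight matrix applied to a state in which only the last `num_relu` units pass through a ReLU) is an assumption made for illustration; the abstract itself does not state the exact equations, and all names below are hypothetical.

```python
def al_rnn_step(z, a_diag, W, h, num_relu):
    """One step z_next = A z + W phi(z) + h, with ReLU applied to only the last num_relu units.

    With num_relu ReLU units the map is linear within each of at most
    2 ** num_relu subregions of state space.
    """
    m = len(z)
    phi = [z[i] if i < m - num_relu else max(0.0, z[i]) for i in range(m)]
    return [
        a_diag[i] * z[i] + sum(W[i][j] * phi[j] for j in range(m)) + h[i]
        for i in range(m)
    ]


def symbol(z, num_relu):
    """Symbolic code of a state: the on/off pattern of the ReLU units."""
    return tuple(int(v > 0) for v in z[len(z) - num_relu:])


# Hypothetical 2-unit system with a single ReLU unit (two linear subregions).
z0 = [1.0, -2.0]
z1 = al_rnn_step(z0, a_diag=[0.5, 0.5], W=[[0.0, 1.0], [1.0, 0.0]], h=[0.0, 0.1], num_relu=1)
codes = [symbol(z0, 1), symbol(z1, 1)]
```

Following a trajectory and recording `symbol(z, num_relu)` at every step turns the continuous dynamics into a sequence over a small alphabet, which is the kind of symbolic encoding the abstract refers to.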

Qi Song, Tianxiang Gong, Shiqi Gao, Haoyi Zhou, Jianxin Li

rating : 7.33 - [7, 7, 8] - Poster

tl;dr: We propose QUEST, a novel framework with quaternion objectives and constraints to both capture shared and unique information.

Multimodal contrastive learning (MCL) has recently demonstrated significant success across various tasks. However, existing MCL treats all negative samples equally and ignores the potential semantic association with positive samples, which limits the model's ability to achieve fine-grained alignment. In multi-view scenarios, MCL tends to prioritize shared information while neglecting modality-specific unique information across different views, leading to feature suppression and suboptimal performance in downstream tasks. To address these limitations, we propose a novel contrastive framework named *QUEST: Quadruple Multimodal Contrastive Learning with Constraints and Self-Penalization*. In the QUEST framework, we propose quaternion contrastive objectives and orthogonal constraints to extract sufficient unique information. Meanwhile, a shared information-guided penalization is introduced to ensure that shared information does not excessively influence the optimization of unique information. Our method leverages quaternion vector spaces to simultaneously optimize shared and unique information. Experiments on multiple datasets show that our method achieves superior performance in multimodal contrastive learning benchmarks. On public benchmarks, our approach achieves state-of-the-art performance, and on synthetic shortcut datasets, we outperform existing baseline methods by an average of 97.95% on the CLIP model.

Steve Hanneke, Amin Karbasi, Shay Moran, Grigoris Velegkas

rating : 7.33 - [7, 7, 8] - Poster

tl;dr: We provide a complete characterization of the universal rates landscape of active learning.

In this work we study the problem of actively learning binary classifiers from a given concept class, i.e., learning by utilizing unlabeled data and submitting targeted queries about their labels to a domain expert. We evaluate the quality of our solutions by considering the learning curves they induce, i.e., the rate of decrease of the misclassification probability as the number of label queries increases. The majority of the literature on active learning has focused on obtaining uniform guarantees on the error rate which are only able to explain the upper envelope of the learning curves over families of different data-generating distributions. We diverge from this line of work and we focus on the distribution-dependent framework of universal learning whose goal is to obtain guarantees that hold for any fixed distribution, but do not apply uniformly over all the distributions. We provide a complete characterization of the optimal learning rates that are achievable by algorithms that have to specify the number of unlabeled examples they use ahead of their execution. Moreover, we identify combinatorial complexity measures that give rise to each case of our tetrachotomic characterization.